Six months ago (forever in Generative AI terms), while working at Vulcan Cyber, I conducted research on LLM hallucinations in open source package recommendations.

In that research I exposed a new attack technique: AI Package Hallucination. The technique relies on LLM tools such as ChatGPT, Gemini, and others recommending packages that do not exist. An attacker can then publish malicious packages under those hallucinated names, and the model's output steers end users toward them.

I also tested the technique on the GPT-3.5 Turbo model, using 457 questions on over 40 subjects in 2 programming languages. For almost 30% of my questions, the model recommended at least one hallucinated package that attackers could use for malicious purposes.

This time, I aimed to take it to the next level and scale up everything from my previous research: the number of questions asked, the number of languages checked, and the models tested.

I kicked off this follow-up research for several reasons:

1️⃣ I wanted to investigate whether package hallucinations persist in the current landscape, six months after the initial findings. I wanted to reaffirm the significance of this problem and to check whether model providers have dealt with this issue by now.

2️⃣ I intended to expand the scope of my previous investigation by utilizing a larger number of questions, thereby validating whether the percentages of hallucinated packages remain consistent with my earlier results. Additionally, I was looking to assess the resilience of this technique across different LLMs, as it is crucial to understand its adaptability.

3️⃣ I was keen on exploring the possibility of cross-model hallucinations, where the same hallucinated package appears in different models, shedding light on potential shared hallucinations.

4️⃣ I aimed to gather more comprehensive statistics regarding the repetitiveness of package hallucinations, to further enrich our understanding of the breadth of this security concern.

5️⃣ I wanted to test the attack effectiveness in the wild, and to see if the technique I discovered could actually be exploited, to confirm the practical applicability of my findings.

In order to simulate the hallucinated package scenario, I gathered and asked the different models a set of generic questions that developers are likely to ask in real-life situations.

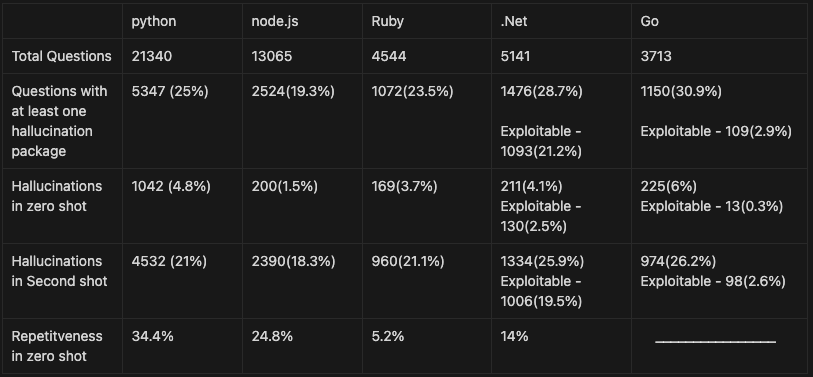

This time I collected 2500 questions related to over 100 subjects in 5 programming languages (Python, Node.js, Go, .NET, Ruby). After collecting and analyzing the set of questions, I kept only the “how to” questions, in order to simulate how developers work with the models and to prompt the model to offer a solution that includes a package. In total I collected 47,803 “how to” questions and put them to four different models via their APIs:

GPT-3.5-Turbo, GPT-4, Gemini Pro (Bard), and Coral (Cohere).

Another difference from the previous research is that this time I used the LangChain framework to handle the model interactions. I kept its default prompt, which tells the model to say so if it doesn’t know the answer (see the picture below). We expected this mechanism to make it harder for the models to provide a hallucinated answer.

To check repetitiveness, I randomly chose 20 questions with zero-shot hallucinations (meaning we received a hallucinated package the first time we asked the model the question). I then asked each of these questions 100 times on each model. My goal was to check whether I would receive the same hallucinated package every time.
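The repetition check described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the research: `ask` (a callable returning the package names found in one model answer) and `is_hallucinated` (a predicate that decides whether a name is a hallucination) are hypothetical helpers introduced here.

```python
from collections import Counter
from typing import Callable, Iterable


def count_repeated_hallucinations(
    question: str,
    ask: Callable[[str], Iterable[str]],      # hypothetical: one model call -> package names
    is_hallucinated: Callable[[str], bool],   # hypothetical: True if the package does not exist
    runs: int = 100,
) -> Counter:
    """Ask the same question `runs` times and count how often each
    hallucinated package name shows up across the answers."""
    counts: Counter = Counter()
    for _ in range(runs):
        # Count each package at most once per answer.
        for name in set(ask(question)):
            if is_hallucinated(name):
                counts[name] += 1
    return counts
```

A name whose count is close to `runs` is one the model returns almost every time, which is the kind of hallucination an attacker could most reliably target.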

In total we received 24.2% hallucinations, with 19.6% repetitiveness.

In total we received 22.2% hallucinations, with 13.6% repetitiveness.

In total we received 64.5% hallucinations, with 14% repetitiveness.

In total we received 29.1% hallucinations, with 24.2% repetitiveness.

In Go and .NET we received many hallucinated packages across all models. Although the numbers are high, not all of the hallucinated packages were exploitable.

1. In Go, there is no centralized package repository; packages are hosted in different places such as private domains or repositories on GitHub, GitLab, Bitbucket, etc. When we checked the hallucinated Go packages, we found that many of them pointed to repositories that don't exist under a username that does, or to domains that were already taken. In practice, this means an attacker wouldn’t be able to upload malicious packages to these paths.

2. In .NET, there is a centralized package repository, but some prefixes are reserved. When we checked the hallucinated packages, many of them started with reserved prefixes (Microsoft, AWSSDK, Google, etc.), which means an attacker who finds these hallucinations would not be able to upload packages under these names either.
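The two exploitability filters above can be approximated with a couple of small helpers. This is only a sketch under assumptions: the prefix list contains just the three examples named in the text (the real list of reserved prefixes is longer), the "prefix followed by a dot" matching rule is a simplification, and the function names are made up for illustration.

```python
from typing import Optional, Sequence, Tuple

# Only the examples mentioned in the text; an assumption, not the full reserved list.
RESERVED_PREFIXES = ("Microsoft", "AWSSDK", "Google")


def has_reserved_prefix(package_id: str, prefixes: Sequence[str] = RESERVED_PREFIXES) -> bool:
    """Return True if a .NET package ID equals a reserved prefix or starts with
    '<prefix>.' (compared case-insensitively; a simplified matching rule)."""
    lowered = package_id.lower()
    return any(
        lowered == p.lower() or lowered.startswith(p.lower() + ".")
        for p in prefixes
    )


def go_host_and_owner(module_path: str) -> Tuple[str, Optional[str]]:
    """Split a Go module path such as 'github.com/user/repo' into its host
    and its owner segment (None if the path has no owner segment)."""
    parts = module_path.strip("/").split("/")
    return parts[0], (parts[1] if len(parts) > 1 else None)
```

For a Go path, an attacker can only claim the hallucinated name if the host or owner segment is still free, so a follow-up check on those two values (not shown here) would decide whether the path is exploitable.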

After finding all the hallucinated packages in all the models above, we checked the results and compared them between the models. We looked for intersections of hallucinated packages that were received in more than one model.

I also tested the intersection across all of the models together and found 215 hallucinated packages.

It’s interesting to note that the highest number of cross-hallucinated packages was found between Gemini and GPT-3.5, and the lowest between Cohere and GPT-4. We think this data, and this type of research, can shed new light on the phenomenon of hallucinations in general.
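The cross-model comparison boils down to set intersections. A minimal sketch, assuming each model's hallucinated package names have already been collected into a set (the function names and the input shape are illustrative, not taken from the research code):

```python
from itertools import combinations
from typing import Dict, Set, Tuple


def pairwise_overlap(results: Dict[str, Set[str]]) -> Dict[Tuple[str, str], Set[str]]:
    """For every pair of models, return the hallucinated packages they share."""
    return {
        (a, b): results[a] & results[b]
        for a, b in combinations(sorted(results), 2)
    }


def common_to_all(results: Dict[str, Set[str]]) -> Set[str]:
    """Return the hallucinated packages that appear in every model's results."""
    return set.intersection(*results.values()) if results else set()
```

Sorting the pair sizes from `pairwise_overlap` gives the highest and lowest overlaps, and `common_to_all` gives the packages hallucinated by every model.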

During our research, we encountered an interesting hallucinated Python package called “huggingface-cli”.

I decided to upload an empty package by the same name and see what happened. To distinguish real downloads from scanner traffic, I also uploaded a dummy package called “blabladsa123” to measure how many downloads come from scanners.

The results are astonishing. In three months the fake and empty package got more than 30k authentic downloads! (and still counting).
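The role of the dummy package can be expressed as simple arithmetic: nobody has a reason to install it, so its download count approximates scanner traffic, and subtracting it from the target package's count leaves an estimate of authentic downloads. The sketch below assumes scanners fetch both packages at a similar rate; the function name is made up for illustration.

```python
def estimate_authentic_downloads(target_downloads: int, baseline_downloads: int) -> int:
    """Estimate real installs by subtracting the dummy package's downloads
    (assumed to be scanner traffic only) from the target package's downloads."""
    return max(target_downloads - baseline_downloads, 0)


# Placeholder numbers for illustration only; these are not figures from the research.
estimated = estimate_authentic_downloads(target_downloads=1000, baseline_downloads=150)
```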

In addition, we searched GitHub to determine whether this package was used in other companies' repositories. Our findings revealed that several large companies either use or recommend this package in their repositories. For instance, instructions for installing this package can be found in the README of a repository dedicated to research conducted by Alibaba.

I'll divide my recommendations into two main points:

💡 First, exercise caution when relying on Large Language Models (LLMs). If you're presented with an answer from an LLM and you're not entirely certain of its accuracy—particularly concerning software packages—make sure to conduct thorough cross-verification to ensure the information is accurate and reliable.

💡 Secondly, adopt a cautious approach to using Open Source Software (OSS). Should you encounter a package you're unfamiliar with, visit the package's repository to gather essential information about it. Evaluate the size of its community, its maintenance record, any known vulnerabilities, and the overall engagement it receives, indicated by stars and commits. Also, consider the date it was published and be on the lookout for anything that appears suspicious. Before integrating the package into a production environment, it's prudent to perform a comprehensive security scan.
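Part of this vetting can be automated. For Python packages, one option is PyPI's public JSON endpoint (`https://pypi.org/pypi/<name>/json`), which returns a 404 for names that do not exist. The sketch below is a starting point, not a complete security check: the helper names are hypothetical, and it only reports the release count and the earliest upload time, so a brand-new package with a single release stands out.

```python
import json
import urllib.error
import urllib.request
from typing import Optional


def fetch_pypi_metadata(name: str) -> Optional[dict]:
    """Fetch package metadata from PyPI's JSON endpoint; None if the package does not exist."""
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def summarize(metadata: dict) -> dict:
    """Extract a few signals worth a manual look: release count and first upload time."""
    releases = metadata.get("releases", {})
    uploads = sorted(
        f["upload_time"] for files in releases.values() for f in files
    )
    return {
        "name": metadata["info"]["name"],
        "release_count": len(releases),
        "first_upload": uploads[0] if uploads else None,
    }
```

Calling `fetch_pypi_metadata` on a name suggested by an LLM and getting `None` back means the package does not exist on PyPI. A very recent `first_upload` together with a low `release_count` is a cue to inspect the repository by hand before installing.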